(Just in case you have forgotten the user/password for the server, it's admin/admin.) The installation procedure described above does this automatically. With our new, cross-region-aware OLAP pipeline, we reduced our overall infrastructure footprint by over 90 percent. FCHI represents the different clusters that are deployed in our internal Kubernetes infrastructure. Run the following command to access the ClickHouse cluster; you can then use commands such as `SHOW DATABASES` to interact with it. To add the ClickHouse repository key, run `sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 8919F6BD2B48D754`. We can now proceed with the installation of the monitoring stack. You will see an operator for Prometheus as well as a couple of pods. Log out of KubeSphere and log back in as project-regular. Our ingestion and egress layers always prefer to write to or read from the nearest ClickHouse replica for a shard. Our 15-minute MV table representation is as follows. Each roll-up table has a different time-based partition key. In Pods under Application Workloads, you can see that all the Pods are up and running.
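The "nearest replica" preference can be illustrated with a small selection routine. This is a minimal sketch, not eBay's discovery module: it assumes "nearest" simply means "same region", and the `Replica` type, host names, and region names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    host: str            # hypothetical replica address
    region: str          # region / data center the replica runs in
    healthy: bool = True


def pick_replica(replicas: list[Replica], local_region: str) -> Replica:
    """Prefer a healthy replica in the caller's own region; otherwise use any healthy replica."""
    healthy = [r for r in replicas if r.healthy]
    if not healthy:
        raise RuntimeError("no healthy replica available for this shard")
    for r in healthy:
        if r.region == local_region:
            return r
    return healthy[0]  # cross-region fallback


# Hypothetical two-region layout: each shard has one replica per region.
topology = {
    0: [Replica("ch-0-0.region-a", "region-a"), Replica("ch-0-1.region-b", "region-b")],
    1: [Replica("ch-1-0.region-a", "region-a"), Replica("ch-1-1.region-b", "region-b")],
}

for shard, replicas in topology.items():
    print(shard, pick_replica(replicas, "region-b").host)
```

A real implementation would likely rank replicas by measured latency rather than a region label, but the fallback behavior (stay local when possible, cross regions only when the local replica is down) is the point being illustrated.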

Run `sudo apt-get update`. Don't forget to run the port-forward command. The quickest way to install the ClickHouse operator is to apply the .yaml deployment file, as shown below. Once the second command shows a running clickhouse-operator pod, you are ready to proceed. One-day MV data is retained for one year, and raw concrete table data is retained for two days. All of the key components run in Kubernetes. For example, when querying the last 12 hours of data, we use the one-hour materialized view table, and so on. If you don't have Kubernetes handy, you can take a break and install Minikube. This blog article shows how to set up the default monitoring stack in a Kubernetes installation that you fully control.
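The time-range routing between the raw table and the roll-up MVs can be sketched as a lookup from query range to table. Only the "last 12 hours uses the one-hour MV" rule and the retention limits come from the text; the other cut-offs and all MV table names (`appevent_mv_1m` and so on) are hypothetical.

```python
from datetime import timedelta

# Hypothetical routing table, finest granularity first: (maximum query range, table).
# "appevent" is the raw concrete table; the MV table names are illustrative only.
ROUTES = [
    (timedelta(hours=1), "appevent"),
    (timedelta(hours=3), "appevent_mv_1m"),
    (timedelta(hours=6), "appevent_mv_15m"),
    (timedelta(days=7), "appevent_mv_1h"),
    (timedelta(days=365), "appevent_mv_1d"),  # one-day MV data is kept for one year
]


def pick_table(query_range: timedelta) -> str:
    """Pick the first (finest-grained) table whose maximum range covers a 'last N' query."""
    if query_range <= timedelta(0):
        raise ValueError("query range must be positive")
    for max_range, table in ROUTES:
        if query_range <= max_range:
            return table
    raise ValueError("range exceeds the one-year retention of the one-day MV")


print(pick_table(timedelta(hours=12)))
```

Coarser tables hold far fewer rows per time span, so routing long ranges to them keeps scans small while short, recent ranges still get full detail from the raw table.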


It is recommended that you have at least two worker nodes available in your cluster.

They are immutable and represent key signals in the system, such as database failures and device failures, which can happen in surges. We are planning more articles to address them, so stay tuned. This tutorial demonstrates how to deploy ClickHouse Operator and a ClickHouse cluster on KubeSphere. You'll see a dashboard like this. Press the Altinity ClickHouse Operator Dashboard link, and you will be rewarded with something like the following. If you are already familiar with Grafana, you will find the default dashboard easy to understand. Timestamp precision is always in seconds, and other labels are also used in the sorting order based on query patterns. Having defined an event signal, we looked at various backend solutions that would help us create a scalable and resilient platform for events while also supporting alerts. Click a single StatefulSet to go to its detail page. For this example, we will install a cluster with 2 shards of 2 replicas each. Once they are up, though, how can you see what they are actually doing? If they are not ready, refer to.
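A 2-shard, 2-replica cluster is described to the Altinity operator as a `ClickHouseInstallation` resource. The sketch below builds such a manifest as a Python dict and prints it as JSON (which `kubectl apply -f` also accepts). It assumes the `clickhouse.altinity.com/v1` layout fields `shardsCount` and `replicasCount`; the installation name, cluster name, and ZooKeeper host are hypothetical placeholders.

```python
import json


def chi_manifest(name: str, cluster: str, shards: int, replicas: int, zk_host: str) -> dict:
    """Build a minimal ClickHouseInstallation manifest with a sharded, replicated cluster."""
    return {
        "apiVersion": "clickhouse.altinity.com/v1",
        "kind": "ClickHouseInstallation",
        "metadata": {"name": name},
        "spec": {
            "configuration": {
                # Replication needs ZooKeeper; this host name is a placeholder.
                "zookeeper": {"nodes": [{"host": zk_host}]},
                "clusters": [
                    {"name": cluster, "layout": {"shardsCount": shards, "replicasCount": replicas}}
                ],
            }
        },
    }


manifest = chi_manifest("simple", "replicated", shards=2, replicas=2, zk_host="zookeeper.zoo1ns")
print(json.dumps(manifest, indent=2))
layout = manifest["spec"]["configuration"]["clusters"][0]["layout"]
print("ClickHouse pods expected:", layout["shardsCount"] * layout["replicasCount"])
```

Saving the output to a file such as `chi.json` and running `kubectl apply -f chi.json` would hand it to the operator, which then creates one ClickHouse pod per shard replica (four here).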

RadonDB ClickHouse is a deeply customized ClickHouse cluster application that retains ClickHouse cluster functions and adds automated cluster management, data redistribution within clusters, and excellent performance at lower cost. We explored ClickHouse late last year and, based on documentation and extensive benchmarking tests, it seemed to fit our events use case well and yielded impressive numbers. Next, point your browser to the following URL: http://localhost:3000. We created two custom resource definitions on Kubernetes, called FederatedClickHouseInstallation (FCHI) and FederatedClickHouseCluster (FCHC). The next component is Prometheus, a time-series database that stores metrics on all components we are observing.


Changes to metrics take a few minutes to percolate through Prometheus. Auto-remediation and configuration management provided by eBay's custom Kubernetes operators have greatly reduced DevOps activity. FCHI allows us to maintain all the cluster topologies in one place for discovery and for joins across multiple ClickHouse clusters. Developers are familiar with the attributes and characteristics of log and metric signals.

You'll need to add a section to your ClickHouse cluster resource file that looks like the following. Step 2: Ensure that your Grafana service has the ClickHouse plugin loaded.
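As a hedged sketch of what a user section might contain, assuming the goal is a ClickHouse user that pods in other namespaces can reach, the snippet below builds a `spec.configuration.users` block using the `<user>/<setting>` key convention seen in the Altinity operator examples. The user name `grafana` and the password are hypothetical placeholders.

```python
import json


def chi_users_section(user: str, password: str, networks: list[str]) -> dict:
    """Build a hypothetical spec.configuration.users block for a ClickHouseInstallation."""
    return {
        "spec": {
            "configuration": {
                "users": {
                    f"{user}/password": password,
                    # "::/0" is ClickHouse's conventional "allow from anywhere" network,
                    # which includes pods in other namespaces. Narrow it in production.
                    f"{user}/networks/ip": networks,
                }
            }
        }
    }


section = chi_users_section("grafana", "change-me", ["::/0"])
print(json.dumps(section, indent=2))
```

The resulting block would be merged into the existing installation resource rather than applied on its own.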

(Note: If you have already cloned the repo, run `git pull` to ensure it is up to date.)

ZooKeeper is necessary for ClickHouse replication to work. The scope of this article is for you to deploy a production-ready ClickHouse environment inside Kubernetes with persistent storage.

To make dashboards visible, expose the Grafana port as follows. The setup also references the ZooKeeper script `deploy/zookeeper/quick-start-persistent-volume/zookeeper-1-node-create.sh`, the ZooKeeper path for a replicated table, `'/clickhouse/{cluster}/tables/{shard}/sense/sdata2'`, and the load-balancer URL http://clickhouse-simple.default.svc.cluster.local:8123 (see also "Monitoring ClickHouse on Kubernetes With Prometheus and Grafana"). Click a persistent volume to go to its detail page.
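A ZooKeeper path such as `/clickhouse/{cluster}/tables/{shard}/sense/sdata2` contains macros that each ClickHouse server fills in from its own macros configuration, so replicas of the same shard share one path while different shards get different paths. The sketch below mimics that substitution; the macro values for a 2 × 2 cluster are hypothetical.

```python
import re


def expand_macros(template: str, macros: dict[str, str]) -> str:
    """Replace {name} placeholders with per-server macro values, failing on unknown macros."""
    def lookup(match):
        name = match.group(1)
        if name not in macros:
            raise KeyError(f"no macro defined for {{{name}}}")
        return macros[name]
    return re.sub(r"\{(\w+)\}", lookup, template)


ZK_PATH = "/clickhouse/{cluster}/tables/{shard}/sense/sdata2"

# Hypothetical macro values for a 2-shard x 2-replica cluster named "simple".
servers = [
    {"cluster": "simple", "shard": str(s), "replica": f"replica-{s}-{r}"}
    for s in (0, 1)
    for r in (0, 1)
]
for m in servers:
    print(m["replica"], "->", expand_macros(ZK_PATH, m))
```

Because the `{replica}` macro is not part of the path, both replicas of a shard register under the same ZooKeeper node, which is what lets them replicate each other's inserts.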

We also did a cost comparison of infrastructure footprint and storage, which showed that we could cut back on our existing infrastructure used for Druid by over 90 percent. Install the prerequisite packages with `sudo apt-get install apt-transport-https ca-certificates dirmngr`. You won't need to build anything, because the actual services are prebuilt container images. Examples of event use cases include application traces and online analytical processing (OLAP) data. Starting at the top, the ClickHouse Kubernetes Operator deploys and manages ClickHouse clusters on Kubernetes. Install the server and client with `sudo apt-get install -y clickhouse-server clickhouse-client`.

I won't go into too much detail on why you should consider ClickHouse as your data warehouse, since there are a number of articles on that already. On the ClickHouse backend, this schema translates into multiple tables. ClickHouse is claimed to run 100-1000x faster than traditional approaches, outperforming comparable column-oriented database management systems on the market. Log in to the KubeSphere web console as admin, and use Kubectl from the Toolbox in the lower-right corner to run the following command to install ClickHouse Operator. Your repository will be displayed in the list after it is successfully imported to KubeSphere. Click the name of any panel and select View to look at individual metrics in detail. Events exhibit characteristics of both logs and metrics. FCHC is used to create the ClickHouse cluster on individual Kubernetes clusters in different regions using the open-source operator. Let's start with a quick look at how monitoring works in a Kubernetes ClickHouse installation. Click Validate to verify the URL; if it is available, you will see a green check mark next to it.
We've run OLAP on Druid for years, but as our platform scaled and OLAP traffic increased, we sought new solutions to reduce the cost of maintaining Druid and to address occasional availability challenges. On top of the raw concrete table, we created materialized views (MVs) at intervals of one minute, 15 minutes, one hour, and one day for an MV-defined table. Click OK to continue. Published at DZone with permission of Robert Hodges. The ingestion (ingress) and query (egress) modules, which were built on top of ClickHouse, use a lightweight, custom Kubernetes-based discovery module to consume this cluster view. Our model of using real-time Kubernetes events for ClickHouse infrastructure discovery in our ingestion and query layers has helped us quickly detect infrastructure changes and handle failures. Click Install to continue.
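What each roll-up MV stores can be approximated as "truncate the timestamp to the interval, then aggregate". The sketch below counts events per (pool, interval start) for the four granularities named above. It is not eBay's MV definition: in ClickHouse the truncation would typically be done inside the MV's SELECT with a function such as `toStartOfInterval`, and the sample events and pool names here are hypothetical.

```python
from collections import Counter

# Roll-up granularities named in the text, expressed in seconds.
INTERVALS = {"1m": 60, "15m": 15 * 60, "1h": 60 * 60, "1d": 24 * 60 * 60}


def bucket_start(ts: int, interval_s: int) -> int:
    """Truncate a Unix timestamp (UTC seconds) to the start of its interval."""
    return ts - ts % interval_s


def rollup(events: list[tuple[str, int]], interval_s: int) -> Counter:
    """Count events per (pool, interval start), a stand-in for a count() roll-up table."""
    return Counter((pool, bucket_start(ts, interval_s)) for pool, ts in events)


# Hypothetical raw events: (pool, source timestamp in seconds).
events = [
    ("checkout", 1_700_000_000),
    ("checkout", 1_700_000_030),
    ("checkout", 1_700_000_050),
    ("search", 1_700_000_010),
]

for name, seconds in INTERVALS.items():
    print(name, dict(rollup(events, seconds)))
```

Each coarser table has at most as many rows as the finer one below it, which is why long time ranges can be answered cheaply from the one-hour or one-day tables.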

Finally, put some load on the system by connecting to any ClickHouse pod and executing a clickhouse-benchmark command like the following. You can log in to Grafana with user admin and password admin. At this point, you can edit the dashboard to add new features or move things around. Other Kubernetes distributions may differ. Change to the `deploy/prometheus` directory. This is a nice touch, since it means we can now see ClickHouse metrics without any special effort.

ClickHouse source code is published under the Apache 2.0 License. The Grafana server user and password are admin/admin. Log out of KubeSphere and log back in as ws-admin. You can also check my previous articles, "Setting up a local datawarehouse" and "Build your own data platform", if you want to get acquainted. Hover your cursor over the hammer icon in the lower-right corner, and then select Kubectl. Of course, there are many other types of monitoring, monitoring tools, and environments to monitor.

As part of shard creation, the ClickHouse operator annotates pods; these annotations can be used to build a cluster topology. Real-time data is always served from the appevent raw concrete table, while the MV tables are chosen based on the time range given in the read query. This operator component implements a Prometheus exporter interface. It fetches metrics on ClickHouse nodes from the Metrics Exporter. The Grafana installation script automatically installs a Prometheus-based dashboard for monitoring ClickHouse. The ClickHouse operator tracks cluster configurations and adjusts metrics collection without user interaction. All of our read queries must have a pool attribute, which indicates the application name, and we took advantage of this pattern to sort first on pool and then on source timestamp. ClickHouse's performance is claimed to exceed that of other column-oriented database management systems. Step 1: Create a user with network access enabled from other namespaces. We use 14 fields for OLAP data representation, which include details such as source application, regions, error, type of event, and more. Copyright (c) 2019-2219, Altinity Ltd and/or its affiliates. Both the ingestion and query modules are also built and managed on Kubernetes. The dashboard JSON source is located in clickhouse-operator/deploy/grafana/grafana-with-grafana-operator. To run and manage a platform offering at such high volume, our monitoring team strives to build generic solutions with high availability and strong resilience. As with Prometheus, you will see an operator and a Grafana pod after a successful installation. OLAP data gives quick insights into application health and other key statistics, such as URL or transaction counts. Our ingestion layer always writes to the local concrete table appevent.
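Sorting first on pool and then on source timestamp pays off because a MergeTree table keeps rows ordered by its sorting key, so every query that filters on one pool touches a single contiguous range. The sketch below shows the same effect on an in-memory list with `bisect`; it is an analogy for the on-disk layout, not ClickHouse's implementation, and the rows are hypothetical.

```python
import bisect


def sort_key(row: dict) -> tuple:
    """Sort first on pool, then on source timestamp truncated to whole seconds."""
    return (row["pool"], int(row["ts"]))


def pool_slice(rows: list[dict], pool: str) -> list[dict]:
    """Return the contiguous run of rows for one pool from rows already sorted by sort_key."""
    keys = [sort_key(r) for r in rows]
    lo = bisect.bisect_left(keys, (pool, float("-inf")))
    hi = bisect.bisect_right(keys, (pool, float("inf")))
    return rows[lo:hi]


# Hypothetical events with sub-second source timestamps.
rows = [
    {"pool": "search", "ts": 1700000005.7},
    {"pool": "checkout", "ts": 1700000003.2},
    {"pool": "checkout", "ts": 1700000001.9},
    {"pool": "search", "ts": 1700000002.1},
]
rows.sort(key=sort_key)
print([r["ts"] for r in pool_slice(rows, "checkout")])
```

Two binary searches locate the pool's range without scanning other applications' rows, and within that range the data is already in time order for time-bounded queries.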

You can see the metrics in line charts over a period of time under the Monitoring tab.

The dashboards fetch data using queries back to the Grafana server, which in turn calls Prometheus. See the original article here. Our goal was to have ClickHouse clusters spread across data centers. On the Chart Files tab, you can view the configuration and download the values.yaml file. We used techniques such as data sharding and materialized views to improve read performance. A general problem is that achieving high read performance requires understanding the user data, which can be difficult when hosting multiple customers and their data sets on the platform. We have defined a new monitoring signal, called events, in addition to logs and metrics. Events are strictly structured monitoring signals and therefore adhere to a schema format. This is a handy feature that helps reduce management complexity for the overall stack. The typical DNS name pattern is cluster_name.namespace.svc.cluster.local for Minikube and kops. Monitoring idle systems is dull.
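The DNS pattern above can be turned into a ClickHouse HTTP URL programmatically. This is a minimal sketch assuming the default `cluster.local` cluster domain and ClickHouse's default HTTP port 8123; the label check is a simplified version of the Kubernetes DNS-1123 label rule, and `clickhouse-simple`/`default` are just the names from the example URL in this article.

```python
import re

# Simplified DNS-1123 label: lowercase alphanumerics and '-', alphanumeric at both ends, max 63 chars.
_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def service_dns(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Build <service>.<namespace>.svc.<cluster_domain>, validating the service and namespace labels."""
    for label in (service, namespace):
        if len(label) > 63 or not _LABEL.fullmatch(label):
            raise ValueError(f"not a valid DNS-1123 label: {label!r}")
    return f"{service}.{namespace}.svc.{cluster_domain}"


def http_url(service: str, namespace: str, port: int = 8123) -> str:
    """ClickHouse's HTTP interface listens on port 8123 by default."""
    return f"http://{service_dns(service, namespace)}:{port}"


print(http_url("clickhouse-simple", "default"))
```

Clusters configured with a different cluster domain would pass it explicitly via `cluster_domain`.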
Key features of the ClickHouse operator include:

- Creates ClickHouse clusters defined as custom resources
- Customized storage provisioning (VolumeClaim templates)
- Customized service templates for endpoints
- ClickHouse cluster scaling including automatic schema propagation
- Exporting ClickHouse metrics to Prometheus

You can see the expected output below if the installation is successful. You can also export the JSON definition directly from the Grafana server. Logs typically represent a log line from an application or device and have a timestamp representing when the log record was made. It serves up dashboards to web browsers. Here's the command to start the cluster and see the pods. Sherlock.io is the platform of choice for all eBay monitoring needs, including applications, networks, and devices. Users can visualize the data via a Grafana panel or use the ClickHouse Grafana data source plug-in to point to our egress layer directly. You will find a lot of detailed information provided by Altinity, since they are the creators. Contact me for any Data Science or Data Engineering consultations at tonycini93@hotmail.com.

All applications within eBay are moving toward being hosted by an internally managed Kubernetes platform. In the example below, we have three clusters: OLAP, events, and a query cluster. In the meantime, check out our recent ClickHouse Monitoring 101 webinar for a soup-to-nuts overview.


There are additional buffer tables and a distributed table created on top of this concrete table. Run the following commands to install Prometheus and check that it is running. The CRD (custom resource definition) for FCHC is quite extensive; consider the following instance of FCHC that we host in our infrastructure. If you are using an existing cluster, you will need system privileges to create namespaces and deploy to the kube-system namespace. The centralized Sherlock.io platform manages different monitoring signals, such as logs, metrics, traces, and events. You can run the following command to view the status of ClickHouse Operator resources. The Altinity Operator for ClickHouse creates, configures, and manages ClickHouse clusters running on Kubernetes. Throughout this blog, we will outline our journey in using Kubernetes to migrate OLAP event use cases onto ClickHouse, a column-oriented database. The interface exposes data in a standard format that Prometheus understands. And if you find anything wrong with the scripts described here, log an issue on the ClickHouse operator project in GitHub. Events are not limited by the cardinality of data, or unique metric series, to which most metrics backend systems are typically sensitive.
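The "standard format that Prometheus understands" is the Prometheus text exposition format: `# HELP` and `# TYPE` lines followed by one `name{labels} value` line per sample. The sketch below renders a single gauge family in that format. The metric and label names are hypothetical, not the ones the operator actually exports.

```python
def escape_label_value(value: str) -> str:
    """Escape backslash, double quote and newline, as the Prometheus text format requires."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name: str, help_text: str, samples: list[tuple[dict[str, str], float]]) -> str:
    """Render one gauge metric family in the Prometheus text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        if labels:
            pairs = ",".join(f'{k}="{escape_label_value(v)}"' for k, v in sorted(labels.items()))
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical metric and label names, for illustration only.
out = render_gauge(
    "example_clickhouse_queries_running",
    "Queries currently executing (illustrative).",
    [({"chi": "simple", "hostname": "host-0"}, 3), ({"chi": "simple", "hostname": "host-1"}, 0)],
)
print(out, end="")
```

Prometheus scrapes this plain-text page over HTTP, which is why an exporter only has to serve text, not push data anywhere.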

You can put a load on the system as follows. We love improvements from the open-source community!

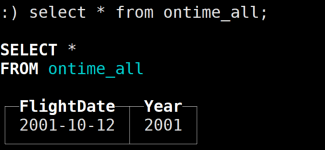

The final component is Grafana. The ClickHouse Kubernetes operator includes scripts to set these up quickly and add a basic dashboard for clusters. After a while, you can see that the app is in the Running status. Even better, fix it yourself and file a PR. Change to the `deploy/grafana/grafana-with-grafana-operator` directory. You can confirm that Prometheus sees the operator by using curl and jq to fetch its active targets. Start the client with `clickhouse-client` (or `clickhouse-client --password` if you set up a password). All further steps require a running Kubernetes cluster and a properly configured kubectl that can reach it.
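The curl + jq check of active targets can also be written in Python against Prometheus's `/api/v1/targets` HTTP API. This sketch assumes Prometheus is reachable locally (for example through a port-forward to `localhost:9090`); the job names and addresses in the sample response are hypothetical, and the sample stands in for a live server.

```python
import json
import urllib.request


def fetch_targets(prometheus_url: str) -> dict:
    """GET <prometheus_url>/api/v1/targets, e.g. prometheus_url="http://localhost:9090"."""
    with urllib.request.urlopen(f"{prometheus_url}/api/v1/targets") as resp:
        return json.load(resp)


def healthy_targets(payload: dict, job_substring: str) -> list[str]:
    """Return scrape URLs of active targets whose job label contains job_substring and that are up."""
    return [
        t["scrapeUrl"]
        for t in payload["data"]["activeTargets"]
        if job_substring in t["labels"].get("job", "") and t["health"] == "up"
    ]


# Hypothetical, trimmed-down response used instead of a live server.
sample = {
    "status": "success",
    "data": {
        "activeTargets": [
            {"labels": {"job": "clickhouse-operator-metrics"}, "scrapeUrl": "http://10.0.0.5:8888/metrics", "health": "up"},
            {"labels": {"job": "node-exporter"}, "scrapeUrl": "http://10.0.0.6:9100/metrics", "health": "up"},
        ],
        "droppedTargets": [],
    },
}
print(healthy_targets(sample, "clickhouse"))
```

Against a live server, `healthy_targets(fetch_targets("http://localhost:9090"), "clickhouse")` would return an empty list if Prometheus has not yet discovered the operator.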

In this tutorial, click Install to use the default settings. Use the selectors at the top of the screen to zero in on data for specific Kubernetes namespaces, ClickHouse installations, and servers. If you are going through the main load balancer, use a URL like http://clickhouse-simple.default.svc.cluster.local:8123. In the window that appears, run the following command and then find the username and password of the ClickHouse cluster. ClickHouse applies dictionary coding to LowCardinality columns, which increases the performance of SELECT queries. ClickHouse also has other external dependencies, such as ZooKeeper, for replication. This command will install ClickHouse Operator in the namespace …
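The dictionary-coding idea behind LowCardinality columns can be sketched in a few lines: each distinct value is stored once, rows hold small integer codes, and a filter compares integers instead of strings. This is a simplified illustration only; ClickHouse's actual storage format is more involved, and the region values are hypothetical.

```python
def dictionary_encode(values: list[str]) -> tuple[list[str], list[int]]:
    """Encode a column as (dictionary, codes): distinct values once, one small int per row."""
    dictionary: list[str] = []
    index: dict[str, int] = {}
    codes: list[int] = []
    for v in values:
        if v not in index:
            index[v] = len(dictionary)
            dictionary.append(v)
        codes.append(index[v])
    return dictionary, codes


def decode(dictionary: list[str], codes: list[int]) -> list[str]:
    """Rebuild the original column from its dictionary and codes."""
    return [dictionary[c] for c in codes]


def count_equal(dictionary: list[str], codes: list[int], target: str) -> int:
    """Filter by code: look the string up once, then compare only integers."""
    if target not in dictionary:
        return 0
    code = dictionary.index(target)
    return sum(1 for c in codes if c == code)


regions = ["us-west", "us-east", "us-west", "eu-central", "us-west"]
d, c = dictionary_encode(regions)
print(d, c, count_equal(d, c, "us-west"))
```

The benefit grows with repetition: a column with millions of rows but only a handful of distinct values shrinks to a tiny dictionary plus compact integer codes.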
- You need to create a workspace, a project, and two user accounts.
- You need to enable the gateway in your project to provide external access.
