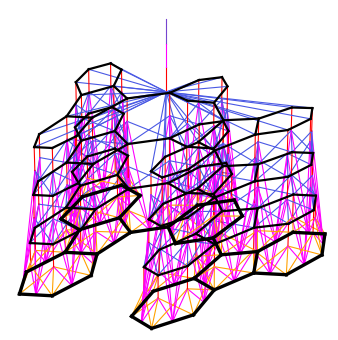

A gated attention global pooling layer from the paper. In this paper, we propose Graph Transformer Networks (GTNs), which can generate new graph structures by identifying useful connections between nodes that are unconnected in the original graph, while learning effective node representations on the new graphs in an end-to-end fashion. In our work, we improve the original architecture from two perspectives: first, we use Transformers instead of a GRU to learn the intra-series representation.

There, I said it. ... the identity matrix, as we don't have ... In the original framework, GNNs are trained inductively, adapting their parameters in a supervised learning setting. In this post, we describe a simple graph ...

The objective: to introduce a new type of neural network that works efficiently on graph data structures. Why it is important: the paper marked the beginning of the GNN movement in deep learning. Simple 4-node graph.

LiDAR-based 3D object detection is an important task for autonomous driving, and current approaches suffer from sparse and partial point clouds caused by distant and occluded objects. However, in the face of complex and changing real-world situations, existing session recommendation algorithms do not fully consider the context information in user decision-making; furthermore, the importance of context information for the ...

Summary and contributions: (1) the paper proposes a neural network on factor graphs for MAP inference; (2) the paper proves that the max-product algorithm is a special case of it (though there can be an exponential number of rank-1 tensors); (3) evaluation on synthetic data, LDPC decoding, and human motion prediction. NeurIPS 2019.

If $p < (1-\epsilon)\frac{\ln n}{n}$, then a random graph $G(n, p)$ will almost surely contain isolated vertices, and thus be disconnected.
Graph Neural Networks (GNNs) are powerful tools for leveraging graph-structured data in machine learning. Graphs are flexible data structures that can model many different kinds of relationships, and they have been used in diverse applications such as traffic prediction and rumor and fake news detection. However, the approach employs SVD for linear dimension reduction, while better non-linear dimension reduction techniques were not explored. In this work we focus on the problem of coarse-grained (CG) mapping selection for a given system. The model could process graphs that are acyclic, cyclic, directed, and undirected.
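
The connectivity threshold for random graphs quoted in this section (around $p = \ln n / n$) can be checked empirically. The sketch below is a minimal Monte Carlo check, assuming the Erdős-Rényi model $G(n, p)$, in which each of the $n(n-1)/2$ possible edges appears independently with probability $p$. The helper names (`sample_gnp`, `is_connected`, `connected_fraction`) and the values $n = 100$ and $\epsilon = 0.3$ are illustrative choices, not taken from any source.

```python
import math
import random


def sample_gnp(n, p, rng):
    """Sample an Erdos-Renyi G(n, p) graph as an adjacency list (dict of sets)."""
    adj = {v: set() for v in range(n)}
    for u in range(n):
        for v in range(u + 1, n):
            if rng.random() < p:  # each possible edge appears independently
                adj[u].add(v)
                adj[v].add(u)
    return adj


def is_connected(adj):
    """Breadth-first search from one node; connected iff every node is reached."""
    if not adj:
        return True
    start = next(iter(adj))
    seen = {start}
    frontier = [start]
    while frontier:
        nxt = []
        for u in frontier:
            for v in adj[u]:
                if v not in seen:
                    seen.add(v)
                    nxt.append(v)
        frontier = nxt
    return len(seen) == len(adj)


def connected_fraction(n, p, trials=50, seed=0):
    """Empirical probability that a sampled G(n, p) is connected."""
    rng = random.Random(seed)
    return sum(is_connected(sample_gnp(n, p, rng)) for _ in range(trials)) / trials


if __name__ == "__main__":
    n, eps = 100, 0.3
    t = math.log(n) / n  # the threshold ln(n) / n
    print("below threshold:", connected_fraction(n, (1 - eps) * t))
    print("above threshold:", connected_fraction(n, (1 + eps) * t))
```

With these settings the first fraction is typically close to 0 and the second much higher; because the result is asymptotic, the transition only becomes sharp as $n$ grows.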

Graph neural networks (GNNs), as a branch of deep learning in non-Euclidean space, perform particularly well in various tasks that process graph structure data.


A GNN layer could be a GCN layer or a GAT layer, while an EGNN layer is its edge-enhanced counterpart. After further simplification, the GCN paper suggests a two-layer neural network, which can be written as a single equation:

$$Z = f(X, A) = \mathrm{softmax}\left(\hat{A}\,\mathrm{ReLU}\left(\hat{A} X W^{(0)}\right) W^{(1)}\right)$$

where $X$ is the node feature matrix, $W^{(0)}$ and $W^{(1)}$ are the layer weight matrices, and $\hat{A}$ is the pre-processed Laplacian of the original graph adjacency matrix $A$ (in Kipf & Welling, $\hat{A} = \tilde{D}^{-1/2}(A + I)\tilde{D}^{-1/2}$, where $\tilde{D}$ is the degree matrix of $A + I$).

Review 1. Get Rid of Suspended Animation: Deep Diffusive Neural Network for Graph Representation Learning. In this paper, we develop a novel hierarchical variational model that introduces additional latent random variables to jointly model the hidden states of a graph recurrent neural network (GRNN), capturing both topology and node attribute changes in dynamic graphs. Xu et al. introduce a new architecture called the Graph Isomorphism Network (GIN). Best paper award: Graph Neural Networks for Massive MIMO Detection; COVID-19 Applications. Two types of GNNs are dominant: the Graph Convolutional Network (GCN) and the Graph Auto-Encoder Network. ... in which they prove a ... Navigating the Dynamics of Financial Embeddings over Time. It will take much effort to fully explain.
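
The two-layer GCN equation can be evaluated directly with dense matrices. This is a minimal NumPy sketch, assuming the renormalized adjacency $\hat{A} = \tilde{D}^{-1/2}(A + I)\tilde{D}^{-1/2}$ from Kipf & Welling as the pre-processed matrix; the 4-node cycle, the feature sizes, and the random weights are hypothetical and stand in for trained parameters.

```python
import numpy as np


def normalize_adjacency(A):
    """Renormalization trick: A_hat = D~^{-1/2} (A + I) D~^{-1/2}."""
    A_tilde = A + np.eye(A.shape[0])        # add self-loops
    d = A_tilde.sum(axis=1)                 # degrees of A + I
    D_inv_sqrt = np.diag(1.0 / np.sqrt(d))
    return D_inv_sqrt @ A_tilde @ D_inv_sqrt


def softmax(Z):
    """Row-wise softmax with max-subtraction for numerical stability."""
    Z = Z - Z.max(axis=1, keepdims=True)
    e = np.exp(Z)
    return e / e.sum(axis=1, keepdims=True)


def gcn_forward(A, X, W0, W1):
    """Z = softmax(A_hat ReLU(A_hat X W0) W1)."""
    A_hat = normalize_adjacency(A)
    H = np.maximum(A_hat @ X @ W0, 0.0)     # first layer + ReLU
    return softmax(A_hat @ H @ W1)          # second layer + softmax over classes


# Hypothetical 4-node graph: the cycle 0-1-2-3-0.
A = np.array([[0, 1, 0, 1],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [1, 0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
X = rng.normal(size=(4, 3))    # 3 input features per node
W0 = rng.normal(size=(3, 8))   # hidden width 8 (arbitrary)
W1 = rng.normal(size=(8, 2))   # 2 output classes (arbitrary)
Z = gcn_forward(A, X, W0, W1)
print(Z.round(3))              # one row of class probabilities per node
```

Dense matrices keep the sketch readable; graph libraries such as PyTorch Geometric or Spektral typically use sparse operations for large graphs.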


The graph model has a particular advantage in describing the relationships between different entities. Graph Neural Network datasets: CORA, PPI (protein-protein interaction).

2.2 Multiplex Graph Neural Networks. A multiplex graph [29] was originally designed to model multifaceted relations between people in sociology, where multiple edges ...
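
One common way to process a multiplex graph is to keep one adjacency matrix per relation and aggregate each relation separately. The sketch below follows the R-GCN update of Schlichtkrull et al. (a mean over neighbours for each relation, plus a self-connection); it is an illustrative assumption, not the multiplex GNN of the section quoted above, and the three-person example is hypothetical.

```python
import numpy as np


def rgcn_layer(A_rel, H, W_rel, W_self):
    """One R-GCN-style layer: h_i' = ReLU(h_i W_self + sum_r mean_{j in N_r(i)} h_j W_r).

    A_rel:  (R, N, N) one adjacency matrix per relation (edge type)
    H:      (N, F) node features
    W_rel:  (R, F, F_out) one weight matrix per relation
    W_self: (F, F_out) weight for the self-connection
    """
    out = H @ W_self
    for A_r, W_r in zip(A_rel, W_rel):
        deg = A_r.sum(axis=1, keepdims=True)
        # Mean over neighbours under relation r; rows without such neighbours stay 0.
        norm = np.divide(A_r, deg, out=np.zeros_like(A_r), where=deg > 0)
        out = out + norm @ H @ W_r
    return np.maximum(out, 0.0)


# Hypothetical multiplex graph over 3 people with two relation types.
A_rel = np.zeros((2, 3, 3))
A_rel[0, 0, 1] = A_rel[0, 1, 0] = 1  # relation 0 ("colleague"): 0-1
A_rel[1, 0, 1] = A_rel[1, 1, 0] = 1  # relation 1 ("friend"): 0-1, a second parallel edge
A_rel[1, 1, 2] = A_rel[1, 2, 1] = 1  # relation 1 ("friend"): 1-2
rng = np.random.default_rng(0)
out = rgcn_layer(A_rel, np.eye(3), rng.normal(size=(2, 3, 4)), rng.normal(size=(3, 4)))
print(out.shape)  # (3, 4)
```

Keeping relations in separate matrices lets the same pair of nodes be linked by several edge types without the edges collapsing into one.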

Graph Neural Networks in Network Neuroscience (www.annualreviews.org): ... data of the subjects, then a GCN-based classifier learned from it to predict the node labels ... see the original paper and the equation above. In this paper, we propose a similarity-based graph neural network model, SGNN, which precisely captures the structural information of nodes in node classification tasks. Abstract. Scope of Reproducibility: in this work we perform a replication study of the paper Parameterized Explainer for Graph Neural Network. Although the success of the Graph Convolutional Layer (GCL) in the Graph Convolutional Network (GCN) [6] is attributed to Laplacian smoothing of node features among neighbourhoods [13] or to low-pass filtering [12], the original node features become over-smoothed when too many GCLs are stacked, and the obtained node ... Let $N$ denote the number of nodes, i.e., $|V|$.

Figure 1: A heterogeneous bibliographic network: (a) node types (Author, Paper, Subject); (b) edge types (Write, Belong-to); (c) the heterogeneous graph (Author1 to Author3, Paper1 to Paper4, Subject1 to Subject3).

Graph neural networks are the preferred neural network architecture for processing data structured as graphs (for example, ... For more information on GAT, see the original paper Graph Attention Networks as well as DGL's Graph ... In this paper, we propose graph estimation neural networks (GEN), which estimate graph structure for GNNs. With the rapid accumulation of biological network data, GNNs have also become an important tool in bioinformatics. Figure: the edge-enhanced graph neural network (EGNN) architecture (right), compared with the original graph neural network (GNN) architecture (left). To cover a broader range of methods, this survey considers GNNs as all deep learning approaches for graph data.
In Sec. 2.1, we describe the original graph neural network ... Figure 4: Graphical explanation of the graph convolutional network model. Behind the scenes, these are already replacing existing recommendation systems and making their way into services you use daily, including Google Maps. In this paper, we propose a graph neural network (GNN) with a multilevel feature fusion structure for high-performance brain-computer interface (BCI) systems. Graph neural networks (GNNs) have been widely used in representation learning on ... between unconnected nodes on the original graph, while learning effective node ... it becomes a standard graph.
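
The over-smoothing effect described above can be seen even without learned weights. This sketch repeatedly applies mean aggregation with self-loops, $\tilde{D}^{-1}(A + I)$, to random node features on a hypothetical 4-node path graph. It deliberately omits weights and nonlinearities, so it only illustrates the smoothing part of a GCL, not a full GCN; the function names are illustrative.

```python
import numpy as np


def mean_propagation(A):
    """Row-normalized propagation matrix D~^{-1}(A + I): each node averages itself and its neighbours."""
    A_tilde = A + np.eye(A.shape[0])
    return A_tilde / A_tilde.sum(axis=1, keepdims=True)


def spread(H):
    """Largest per-feature gap between any two nodes; 0 means all node rows are identical."""
    return float((H.max(axis=0) - H.min(axis=0)).max())


A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)        # hypothetical path graph 0-1-2-3
P = mean_propagation(A)
H0 = np.random.default_rng(0).normal(size=(4, 5))  # random 5-dimensional node features

H = H0
for k in range(1, 101):
    H = P @ H                                    # one propagation "layer" without weights
    if k in (1, 2, 5, 20, 100):
        print(f"after {k:3d} layers: spread = {spread(H):.2e}")
```

On a connected graph with self-loops, repeated averaging drives every node's features toward the same vector, so the printed spread shrinks toward 0 as more layers are stacked.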

Abstract. Graph Neural Networks were introduced back in 2005 (like all the other good ideas), but they have only started to gain popularity in the last five years. This paper presents a new method to reduce the number of constraints in the original optimal power flow (OPF) problem using a graph neural network (GNN). In this paper, we consider the case of $|\mathcal{T}^e| > 1$, i.e., more than one edge type. EGNN differs structurally from GNN in two respects. In this research, a systematic survey of GNNs and their advances in bioinformatics is presented from ... This gap has driven a wave of research on deep learning for graphs, including graph representation learning, graph generation, and graph classification. If you'd like to learn more about Graph Neural Networks, we have provided an Overview of Graph Neural Networks. ... predict tail labels.
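
The Graph Transformer layer of GTNs works on a graph with several edge types: it softly selects a combination of the per-type adjacency matrices and multiplies two such selections to obtain a meta-path graph. The sketch below is a simplified, single-channel version under these assumptions: softmax-normalized selection weights and row normalization of the product. The node and edge-type labels mirror the bibliographic example of Figure 1 but are hypothetical, as are the selection logits.

```python
import numpy as np


def softmax(w):
    """Normalize selection logits into convex-combination weights."""
    e = np.exp(w - w.max())
    return e / e.sum()


def soft_select(A_types, w):
    """Weighted sum of the K per-type adjacency matrices: (K, N, N) -> (N, N)."""
    return np.tensordot(softmax(w), A_types, axes=1)


def meta_path_adjacency(A_types, w1, w2):
    """Multiply two soft selections so entry (i, j) scores length-2 meta-paths i -> k -> j."""
    M = soft_select(A_types, w1) @ soft_select(A_types, w2)
    deg = M.sum(axis=1, keepdims=True)
    return np.divide(M, deg, out=np.zeros_like(M), where=deg > 0)  # row-normalize


# Hypothetical nodes: 0 = Author1, 1 = Paper1, 2 = Paper2, 3 = Subject1.
write = np.zeros((4, 4))
write[0, 1] = write[1, 0] = write[0, 2] = write[2, 0] = 1      # Author1 wrote both papers
belong = np.zeros((4, 4))
belong[1, 3] = belong[3, 1] = belong[2, 3] = belong[3, 2] = 1  # both papers belong to Subject1
A_types = np.stack([write, belong])                            # K = 2 edge types

# Logits that strongly prefer "write" in the first selection and "belong-to" in the second,
# i.e. the meta-path Author -> Paper -> Subject.
M = meta_path_adjacency(A_types, np.array([10.0, -10.0]), np.array([-10.0, 10.0]))
print(M.round(3))
```

Because the selection weights are a softmax rather than a hard choice, they can be learned by gradient descent, which is what lets the layer discover useful meta-paths instead of requiring them to be specified by hand.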

This paper explains graph neural networks, their areas of application, and their day-to-day use. The feature matrix is defined by the features (variables) of the dataset you're using. The term Graph Neural Network, in its broadest sense, refers to any neural network designed to take graph-structured data as its input. This is motivated by Relational Density Theory and is exploited to form a hierarchical attention-based graph neural network. Graph Convolutional Networks (GCNs). Paper: Semi-supervised Classification with Graph Convolutional Networks (2017) [3]. GCN is a type of convolutional neural network that can ... Semi-Implicit Graph Variational Auto-Encoders. We propose to study the problem of few-shot learning through the prism of inference on a partially observed graphical model, constructed from a collection of input images whose labels can be either observed or not. The graph neural network (GNN) model was introduced by Franco Scarselli et al. in 2009. Introduction to graph neural networks (GNNs)
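
In the original GNN model of Scarselli et al., each node's state is computed from its own label and its neighbours' states, and all states are obtained by iterating this transition until it reaches a fixed point, which is guaranteed when the transition is a contraction. The sketch below assumes a simple transition, $x_v \leftarrow \tanh(l_v U + \mathrm{mean}_{u \in N(v)} x_u V)$ in row-vector form, with $V$ rescaled so that the map is a contraction. The transition, the 4-node graph, and the sizes are hypothetical choices for illustration, not the paper's parameterization.

```python
import numpy as np


def gnn_fixed_point(A, L, U, V, tol=1e-8, max_iter=500):
    """Compute node states x_v = tanh(l_v U + mean_{u in N(v)} x_u V) by fixed-point iteration.

    A: (N, N) adjacency, L: (N, k) node labels, U: (k, s), V: (s, s).
    Returns the (N, s) state matrix and the number of iterations used.
    """
    deg = A.sum(axis=1, keepdims=True)
    P = np.divide(A, deg, out=np.zeros_like(A), where=deg > 0)  # mean over neighbours
    # Rescale V so the update has Lipschitz constant <= 0.9 (a contraction),
    # which guarantees a unique fixed point that the iteration converges to.
    scale = np.linalg.norm(P, 2) * np.linalg.norm(V, 2)
    if scale > 0:
        V = 0.9 * V / scale
    B = L @ U                                 # label contribution, fixed across iterations
    X = np.zeros((A.shape[0], V.shape[1]))    # initial states
    for it in range(1, max_iter + 1):
        X_new = np.tanh(B + P @ X @ V)
        if np.linalg.norm(X_new - X) < tol:
            return X_new, it
        X = X_new
    return X, max_iter


A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 1],
              [0, 1, 0, 1],
              [0, 1, 1, 0]], dtype=float)     # hypothetical 4-node graph
rng = np.random.default_rng(0)
L = rng.normal(size=(4, 2))   # 2-dimensional node labels
U = rng.normal(size=(2, 3))   # state dimension s = 3
V = rng.normal(size=(3, 3))
X, iters = gnn_fixed_point(A, L, U, V)
print(iters, X.round(3))
```

The contraction requirement is what distinguishes this formulation from later GNNs, which usually stack a fixed number of layers instead of iterating to convergence.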

To learn more about how Intel uses RGCNs with SigOpt, I encourage you to read this case study. ... graph uses the edge types of the original graph as nodes. The heterogeneous graph can be represented by a set ... Luo et al. claim to have further developed GNNExplainer in their paper Parameterized Explainer for Graph Neural Network [5].

The original Graph Neural Network (GNN). Graph Neural Networks: A Review of Methods and Applications, Zhou et al. Posted by Bryan Perozzi, Research Scientist, and Qi Zhu, Research Intern, Google Research. For this survey, the GNN problem is framed based on the formulation in the original GNN paper, The graph neural network model, Scarselli 2009. Associated with each node is an $s$-dimensional state vector. The target of the GNN is to learn a state embedding that contains the information of each node's neighbourhood. Beyond its powerful aggregator, GIN brings exciting takeaways about GNNs in general. Let's take a look at how our simple GCN model (see the previous section or Kipf & Welling, ICLR 2017) works on a well-known graph dataset: Zachary's karate club network (see Figure above). We take a 3-layer GCN with randomly initialized weights. Working as a crucial tool for graph representation ...

The Graph Convolution Neural Network based on Weisfeiler-Lehman iterations is described by the following pseudo-code:

function Graph Convolution Neural Network

1. Level 0: $h_v^{0} \leftarrow \sigma\left(W^{(1)} f(v)\right)$ for all $v \in V$
2. for each level $l = 1 \to L$:
3. for each $v \in V$:
4. Collect features in the receptive field:
5. $\bigcup_{u \in R_l(v)} h_u^{l-1}$
6. ...

And finally, we conclude the survey in Sec. ... In equation 3 we now input the relative squared distance between two coordinates, $\|x_i^l - x_j^l\|^2$. HDGI is a novel unsupervised graph neural network with an attention mechanism.

The motivation of this white paper is to combine the latest GNN algorithms from abroad, research on acceleration technology, and a discussion of GNN acceleration based on field-programmable gate arrays (FPGAs), and to present them to readers as an overview. Relational inductive biases, deep learning, and graph networks, Battaglia et al. Imagine we have a Graph Neural Network (GNN) model that predicts with fantastic accuracy on our ... Graph pooling is a central component of a myriad of graph neural network (GNN) architectures. TL;DR: One of the challenges that have so far precluded the wide adoption of graph neural networks in industrial applications is the difficulty of scaling them to large graphs such as the Twitter follow graph. The interdependence between nodes makes it challenging to decompose the loss function into contributions from individual nodes. In this paper, we propose a novel label-specific dual graph neural network (LDGN), which incorporates category information to learn label-specific components from documents, and employs a dual Graph Convolution Network (GCN) to model complete and adaptive interactions among these components based on statistical label co-occurrence ... Graph Neural Networks (GNNs) have recently gained increasing popularity in both applications and research, including domains such as social networks, knowledge graphs, recommender systems, and bioinformatics. Graph neural network (GNN) is an effective neural architecture for mining graph-structured data, since it can capture the high-order content and topological information on graphs [12]. GNN is an innovative machine learning model that utilizes features from nodes, edges, and network topology to maximize its performance.
A Comprehensive Survey on Graph Neural Networks, Wu et al. (arXiv, 2019). However, the original ... Semantic segmentation of remote sensing images is a critical and challenging task. This example demonstrates a simple implementation of a Graph Neural Network (GNN) model. Due to its massive success, GNN has made its way into many applications and is a popular architecture to build upon. In CAGNN, we perform clustering on the node embeddings and update the model parameters by predicting the cluster assignments. Original Research. We list a few others below. ... sub-graph and node structure responsible for a given classification. In this paper, we propose a new neural network model, called the graph neural network (GNN) model, that extends existing neural network methods to process data represented in graph domains. GNN encompasses neural network techniques for processing data represented as graphs. We teach our network to modify a graph so ... According to the paper Graph Neural Networks: A Review of Methods and Applications, graph neural networks are connectionist models that capture the dependence of graphs via message passing between the nodes of graphs. Despite the wide adherence to this design choice, no ... Unlike standard neural networks, graph neural networks retain a state that can represent information from their neighborhood with arbitrary depth.

... using the Graph Nets architecture schematics introduced by Battaglia et al. Diving deeper: The original idea behind GNNs. If $p > (1+\epsilon)\frac{\ln n}{n}$, then a random graph $G(n, p)$ will almost surely be connected. Neural networks are made up of a number of layers, with each layer connected to the other layers forming the network. Researchers at the Amazon Quantum Solutions Lab, part of the AWS Intelligent and Advanced Computer Technologies Labs, have recently developed a new tool to tackle combinatorial optimization problems, based on graph neural networks (GNNs). The approach developed by Schuetz, Brubaker and Katzgraber, published in Nature Machine Intelligence, could be used to ...
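
The Weisfeiler-Lehman iterations that the pseudo-code above builds on can be sketched as 1-WL color refinement: each node's new color is its old color together with the multiset of its neighbours' colors. This minimal version keeps the nested colors uncompressed so that histograms from two different graphs can be compared directly; the function name and the example graphs are illustrative. Xu et al. show that message-passing GNNs are at most as discriminative as this test, and that GIN matches it.

```python
from collections import Counter


def wl_histogram(adj, iterations=3):
    """Run 1-WL colour refinement and return the multiset (histogram) of final colours.

    adj maps each node to a list of its neighbours. Colours are nested tuples,
    so histograms from different graphs are directly comparable.
    """
    colors = {v: () for v in adj}  # uniform initial colour
    for _ in range(iterations):
        # New colour = (old colour, sorted multiset of neighbour colours).
        colors = {
            v: (colors[v], tuple(sorted(colors[u] for u in adj[v])))
            for v in adj
        }
    return Counter(colors.values())


path3 = {0: [1], 1: [0, 2], 2: [1]}
triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
cycle6 = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
two_triangles = {0: [1, 2], 1: [0, 2], 2: [0, 1], 3: [4, 5], 4: [3, 5], 5: [3, 4]}

print(wl_histogram(path3) == wl_histogram(triangle))        # False: distinguished
print(wl_histogram(cycle6) == wl_histogram(two_triangles))  # True: not distinguished
```

The second pair shows the test's known limitation: a 6-cycle and two disjoint triangles are both 2-regular with six nodes, so every node receives the same color in both graphs.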

... graph settings, while efforts for modeling dynamic graphs are still scant. The Theory: Nets with Circles. Last year we looked at Relational inductive biases, deep learning, and graph networks, where the authors made the case for deep learning with structured representations, which are naturally represented as graphs. Today's paper choice provides us with a broad sweep of the graph neural network ...

3 Nested Graph Neural Network. In this section, we introduce our Nested Graph Neural Network (NGNN) framework and theoretically demonstrate that it has higher representational power than message passing GNNs. Graph neural networks have been explored in a wide range of domains across supervised, semi-supervised, unsupervised and reinforcement learning settings. We argue that the original PPMI ... The proposed framework can consolidate current graph neural network models, e.g., GCN and GAT. Graph neural networks can be applied to several text-based tasks, both sentence-level (e.g., text classification) and word-level (e.g., sequence labeling). We list several major applications on text in the following. Text Classification. (2) A graph learning neural network named GNEA is designed, which has a powerful learning ability for graph classification tasks. The Graph Transformer layer, a core layer of GTNs, learns a soft selection of edge types and ... In this paper, we propose an accurate predictor, GraphBind, for identifying nucleic-acid-binding residues on proteins based on an end-to-end graph neural network. A simple graph with 4 nodes is shown below. 2.1.2 Graph Encoding: because bit strings are not the most natural representation for networks, most TWEANNs use encodings that represent graph structures more explicitly. TL;DR: GNNs can provide wins over simpler embedding methods, but we're at a point where other research directions matter more. The graph neural network model.
In this paper, we build a new framework for a family of graph neural network models that can more fully exploit edge features, including those of undirected or multi-dimensional edges. Self-attention is equivalent to computing a weighted mean of the neighbourhood node features. In this paper, we propose a graph neural network framework, MultTrend, for multivariate data stream prediction. In this tutorial, we will discuss the application of neural networks on graphs. Coarse-grained (CG) models can be viewed as a two-part problem of selecting a suitable CG mapping and a CG force field. These systems learn to perform tasks by being exposed to various datasets and examples without any task-specific rules. Net is a single-layer feed-forward network. (3) We apply GNEA to a real-world brain network classification ... The replication experiment focuses on three main claims: (1) Is it possible to reimplement the proposed method in a different framework? Gated Graph Sequence Neural Networks, Yujia Li et al. Input: node features of shape `([batch], n_nodes, n_node_features)`; graph IDs of shape `(n_nodes, )` (only in disjoint mode). Output: ... It represents the drug as a graph and extracts two-dimensional drug information using a graph convolutional neural network.
In this paper, we introduce a neural network architecture based on a road network graph adjacency matrix to solve the so-called Traffic Signal Setting (TSS) problem, in which the goal is to find the optimal traffic signal settings for given traffic conditions (as defined in [1]). ... graph neural networks as well as several future research directions. ... graphs in the original paper. Based on the work done in [9], Luo, D. et al. ... Arman Hasanzadeh, Ehsan Hajiramezanali, Krishna Narayanan, Nick Duffield, Mingyuan Zhou, Xiaoning Qian. In the same way, with `jax.grad()` we can compute derivatives of a function with respect to its parameters, which is a building block for training neural networks. In this paper, the proposed method tries to capture sample relations in tabular data applications, and thus can be integrated with any feature interaction method for TDP. Neural networks are artificial systems inspired by biological neural networks. With multiple frameworks like PyTorch Geometric, TF-GNN, Spektral (based on TensorFlow) and more, it is quite simple to implement graph neural networks. We will see a couple of examples here, starting with MPNNs. In this paper, we present a novel cluster-aware graph neural network (CAGNN) model for unsupervised graph representation learning using self-supervised techniques. The key idea is to generate a hierarchical structure that re-organises all nodes in a flat graph into multi-level super graphs, along with innovative intra- and inter-level propagation schemes.

There exist several comprehensive reviews on graph neural networks. Bronstein et al. (2017) provide a thorough review of geometric deep learning, which presents its problems, difficulties, solutions, applications and future directions. Zhang et al. (2019a) propose another comprehensive overview of graph convolutional networks. Since CNN-based and RNN-based models represent compounds as strings, a model's predictive capability may be weakened because it does not consider structural information. Supervised community detection with line graph neural networks. ... in which they introduce feed-forward (nets without cycles) and recurrent (nets with cycles) networks, and the next section, titled ... As an inheritance from traditional CNNs, most approaches formulate graph pooling as a cluster assignment problem, extending the idea of local patches in regular grids to graphs. With this in mind, the main contribution of this paper is GRETEL, a graph neural network that acts as a generative model for paths. Firstly, the adjacency matrix $A$ in GNN is either ... Therefore, GNNs relying solely on the original graph may produce unsatisfactory results; a typical example is that GNNs perform well on graphs with homophily but fail in the disassortative setting.
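
The statement that self-attention computes a weighted mean of the neighbourhood node features can be made concrete with a single GAT-style attention head (after Graph Attention Networks by Veličković et al.). In this NumPy sketch, the scoring function $\mathrm{LeakyReLU}(a^\top [W h_i \,\Vert\, W h_j])$, the inclusion of self-loops, and the 0.2 negative slope are assumptions borrowed from that paper's setup; the function name, graph, and weights are hypothetical.

```python
import numpy as np


def attention_aggregate(A, H, W, a, slope=0.2):
    """Single GAT-style head: each node outputs a softmax-weighted mean of its neighbours' projected features."""
    Z = H @ W                                   # projected features, shape (N, F_out)
    F = Z.shape[1]
    # Score e_ij = LeakyReLU(a^T [z_i || z_j]), split into the z_i half and the z_j half of a.
    e = (Z @ a[:F])[:, None] + (Z @ a[F:])[None, :]
    e = np.where(e > 0, e, slope * e)
    mask = (A + np.eye(A.shape[0])) > 0         # attend to neighbours and to the node itself
    e = np.where(mask, e, -np.inf)              # non-neighbours get zero weight after softmax
    alpha = np.exp(e - e.max(axis=1, keepdims=True))
    alpha = alpha / alpha.sum(axis=1, keepdims=True)  # each row sums to 1 over the neighbourhood
    return alpha @ Z, alpha


A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)          # hypothetical 3-node path
rng = np.random.default_rng(0)
H, W, a = rng.normal(size=(3, 4)), rng.normal(size=(4, 2)), rng.normal(size=4)
out, alpha = attention_aggregate(A, H, W, a)
print(alpha.round(3))   # each row sums to 1; zeros mark non-neighbours
```

Setting the attention vector `a` to zero makes every score equal, and the layer then reduces to a plain mean over each node's neighbourhood, which is exactly the "weighted mean" view with uniform weights.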

(Quoted from the original paper.) Abstract.

In this paper, we altered the GraphDTA model to predict drug-target affinity. I'm only lukewarm on Graph Neural Networks (GNNs).
