For more information, see HTTP client. However, a ClickHouse server cannot be downgraded to a version that does not support this setting, so be careful when upgrading ClickHouse on the servers in a cluster. Default 3; allowed values 1 to 9. How can I forcefully reload the ClickHouse configuration? 1: if the right table has more than one matching row, only the last one is joined. [Zhang Huan 19933] /etc/clickhouse-server/config.d sub-folder for server settings. A condition such as Date='2000-01-01' is acceptable even if it matches all the data in the table (that is, running the query requires a full scan). You can start multiple clickhouse-server instances, each with its own config file. Acceptable values: requireTLSv1_1 (require a TLSv1.1 connection). Can be omitted if replicated tables are not used. 1000000000: once per second, for cluster-wide performance analysis. Specify the absolute path or the path relative to the server config file. Compilation is only used for part of the query-processing pipeline: the first stage of aggregation (GROUP BY). Supported if the library's OpenSSL version supports FIPS. sessionCacheSize: the maximum number of sessions that the server caches. The config.xml file can specify a separate config with user settings, profiles, and quotas. Default 2013265920; any positive integer is allowed. 6. dictionaries_lazy_load: lazy loading of dictionaries. Default false. 29. max_block_size: in ClickHouse, data is processed in blocks (collections of column parts).
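The block model behind max_block_size can be illustrated with a toy sketch. Rows are grouped into blocks of at most max_block_size rows, and each block is stored as one list per column. This is a simplified Python illustration, not ClickHouse's implementation. The `to_blocks` helper and the column-dict layout are hypothetical.

```python
from typing import Dict, Iterator, List, Sequence

# Hypothetical block layout: column name -> values for the rows in this block.
Block = Dict[str, List[object]]


def to_blocks(columns: Sequence[str], rows: Sequence[Sequence[object]],
              max_block_size: int) -> Iterator[Block]:
    """Yield column-oriented blocks holding at most max_block_size rows each."""
    if max_block_size <= 0:
        raise ValueError("max_block_size must be positive")
    for start in range(0, len(rows), max_block_size):
        chunk = rows[start:start + max_block_size]
        # Transpose the row chunk into one list per column ("column parts").
        yield {name: [row[i] for row in chunk] for i, name in enumerate(columns)}
```

For example, five rows with max_block_size=2 yield blocks of 2, 2 and 1 rows.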

The element value is replaced with the contents of the node at /path/to/node in ZooKeeper. When the settings change, existing tables change their behavior. 72. insert_quorum: enables quorum writes and sets how many replicas must be written for an insert to count as successful. You can only set previously granted roles as default roles. WHERE name LIKE 'parts_to_throw_insert'. The maximum number of errors that can be accepted when reading. The default value is 0; allowed values are 0 or a positive integer.
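The quorum rule for insert_quorum can be modelled in a few lines. This hypothetical helper assumes only what the notes state:

- Values below 2 disable quorum writes.
- Otherwise, an insert succeeds only when at least insert_quorum replicas acknowledge it.
- On failure, the block is removed from the replicas that already received it.

It is a model for reasoning about the setting, not ClickHouse code.

```python
from typing import List, Set


def quorum_insert(acked_replicas: List[str], insert_quorum: int) -> Set[str]:
    """Return the replicas that keep the block after a hypothetical quorum insert.

    insert_quorum < 2 disables quorum writes: replicas that received the block
    keep it. Otherwise at least insert_quorum acknowledgements are required;
    if the quorum is not reached, the block is rolled back and an error is raised.
    """
    written = set(acked_replicas)
    if insert_quorum < 2:
        return written
    if len(written) < insert_quorum:
        # Rollback: the block is removed from every replica that already has it.
        raise RuntimeError(
            f"quorum not reached: {len(written)} of {insert_quorum} replicas; "
            f"block removed from {sorted(written)}")
    return written
```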

50. cancel_http_readonly_queries_on_client_close: cancels HTTP read-only queries when the client closes the connection without waiting for the response. Use it with the OpenSSL settings. The default value is 1,000,000. 17. listen_host: restricts the hosts that requests can come from; if you want the server to answer all requests, specify ::. 18. logger: logging settings. If it is clear that less data needs to be retrieved, smaller blocks are processed. interval: the interval for sending, in seconds. ASOF is used to join sequences with a non-exact match. Substitutions can also be performed from ZooKeeper.
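To make the "non-exact match" idea concrete, here is a Python sketch of ASOF-style matching. For each left row, it takes the right row with the same key and the greatest timestamp that is less than or equal to the left timestamp. The `<=` direction and the `(key, timestamp, payload)` tuple layout are assumptions chosen for the illustration. ClickHouse's ASOF JOIN also supports other comparison operators.

```python
from bisect import bisect_right
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

Row = Tuple[str, int, str]  # hypothetical layout: (key, timestamp, payload)


def asof_join(left: Sequence[Row], right: Sequence[Row]) -> List[Tuple[Row, Optional[Row]]]:
    """For each left row, attach the right row with the same key and the
    largest timestamp <= the left timestamp (None if there is no such row)."""
    by_key: Dict[str, List[Row]] = defaultdict(list)
    for row in right:
        by_key[row[0]].append(row)
    times: Dict[str, List[int]] = {}
    for key, rows in by_key.items():
        rows.sort(key=lambda r: r[1])  # sort each key's rows by timestamp
        times[key] = [r[1] for r in rows]

    result = []
    for row in left:
        key, ts = row[0], row[1]
        candidates = by_key.get(key, [])
        idx = bisect_right(times.get(key, []), ts) - 1  # last timestamp <= ts
        result.append((row, candidates[idx] if idx >= 0 else None))
    return result
```

The binary search keeps each lookup logarithmic in the number of right rows per key, which is why the right side is sorted once up front.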

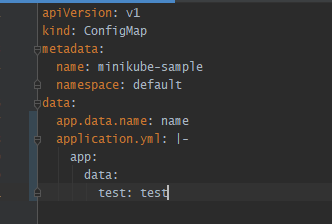

The server tracks changes in config files, as well as files and ZooKeeper nodes that were used when performing substitutions and overrides, and reloads the settings for users and clusters on the fly. If the expressions in successive rows have the same structure, the expressions in Values can be parsed and interpreted more quickly. The path of the file for replacement is /etc/metrika.xml by default and can be modified through the … 33. merge_tree_min_rows_for_seek: if the distance between two data blocks to be read in one file is less than merge_tree_min_rows_for_seek rows, ClickHouse does not seek through the file but reads the data sequentially. I can only find the settings shown in the statement via the SHOW CREATE TABLE command or system.tables, but can't find the default values for a table. Only applicable to IN and JOIN subqueries. If an element has the incl attribute, the corresponding substitution from the file will be used as the value. true: each dictionary is created on first use.
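A rough model of the incl substitution: an element that carries an incl attribute takes its contents from the matching element in the substitutions file. The sketch uses Python's xml.etree.ElementTree. Several parts are assumptions for the example:

- the substitutions file is passed as an in-memory XML string;
- the element names used in it are made up;
- a missing substitution is silently skipped.

It also does not cover ZooKeeper substitutions.

```python
import copy
import xml.etree.ElementTree as ET


def apply_incl(config_xml: str, substitutions_xml: str) -> ET.Element:
    """Replace the contents of every element that has incl="name" with the
    contents of <name> from the substitutions document (simplified model)."""
    config = ET.fromstring(config_xml)
    subs = ET.fromstring(substitutions_xml)
    # Collect targets first so the tree is not modified while iterating over it.
    targets = [elem for elem in config.iter() if elem.get("incl") is not None]
    for elem in targets:
        source = subs.find(elem.get("incl"))
        if source is None:
            continue  # simplification: leave the element untouched
        elem.clear()  # drops old text, children and attributes (including incl)
        elem.text = source.text
        for child in source:
            elem.append(copy.deepcopy(child))
    return config
```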

This makes it possible to edit dictionaries "on the fly" without restarting the server. These files contain all the completed substitutions and overrides, and they are intended for informational use. Most user setting changes don't require a restart, but they are applied at connection time, so existing connections may still use the old user-level settings. 8. graphite: sends data to Graphite, an enterprise monitoring tool. Here Dictionary_name is the dictionary's name; you can look up dictionary names and statuses in the system.dictionaries table. See also https://github.com/ClickHouse/ClickHouse/blob/445b0ba7cc6b82e69fef28296981fbddc64cd634/programs/server/Server.cpp#L809-L883. If none of the … See the explanation under Replication and ZooKeeper. When running a large number of short queries, using the uncompressed cache (only applicable to tables in the MergeTree family) can effectively reduce latency and increase throughput. Uses the localhost replica when processing distributed queries. Tables of this engine family start a background process to delete old data. 29. query_log: when log_queries = 1 is set, the queries received are recorded. Default 1048576; any positive integer is allowed.
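The cache-protection rule in these notes can be written as a predicate. This is a minimal sketch under one assumption: a query may use the uncompressed cache only when use_uncompressed_cache is on and the data it reads stays within both merge_tree_max_rows_to_use_cache and merge_tree_max_bytes_to_use_cache. The defaults 1048576 and 2013265920 are the values quoted in these notes. The function itself is hypothetical.

```python
# Default limits as quoted in the notes above.
MAX_ROWS_TO_USE_CACHE = 1_048_576       # merge_tree_max_rows_to_use_cache
MAX_BYTES_TO_USE_CACHE = 2_013_265_920  # merge_tree_max_bytes_to_use_cache


def may_use_uncompressed_cache(rows_to_read: int, bytes_to_read: int,
                               use_uncompressed_cache: bool = True,
                               max_rows: int = MAX_ROWS_TO_USE_CACHE,
                               max_bytes: int = MAX_BYTES_TO_USE_CACHE) -> bool:
    """Hypothetical check: large reads bypass the cache so they cannot evict
    data that many small queries depend on."""
    return use_uncompressed_cache and rows_to_read <= max_rows and bytes_to_read <= max_bytes
```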

Default 0. This setting protects the cache from queries that read large amounts of data. If true, each dictionary is created on first use. The default value is 65536. Default 0; only applicable to the MergeTree family. vim /etc/clickhouse-server/config.xml. If the server has millions of small tables that are constantly created and destroyed, it makes sense to disable it. If the size of a MergeTree table exceeds max_table_size_to_drop (in bytes), it cannot be deleted with a DROP query.
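The DROP protection for large MergeTree tables can be sketched as a size check. This hypothetical helper assumes that a limit of 0 means no restriction. It also treats a table exactly at the limit as droppable, since only tables that exceed the limit are blocked. The 50 GB figure in the test is just an example value, not a stated default.

```python
def can_drop_table(table_size_bytes: int, max_table_size_to_drop: int) -> bool:
    """Return True if a DROP query would be allowed for a table of this size.

    Assumption: a limit of 0 disables the check entirely.
    """
    if max_table_size_to_drop == 0:
        return True
    return table_size_bytes <= max_table_size_to_drop
```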

For queries that read a large amount of data (a million rows or more), the uncompressed cache is automatically disabled to save space for genuinely small queries. The query is sent to the replica with the fewest errors; if there are several, to any one of them. Reset the compiled expression cache. Replication latency is not controlled.
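The replica-selection rule above is to send the query to the replica with the fewest errors and pick any of them on a tie. It can be sketched as follows. The replica names and error counts are made up. In ClickHouse this describes the random load_balancing policy, but the code is only a model of the rule, not its implementation.

```python
import random
from typing import Dict, Optional


def pick_replica(error_counts: Dict[str, int],
                 rng: Optional[random.Random] = None) -> str:
    """Choose a replica with the minimum error count; break ties at random."""
    if not error_counts:
        raise ValueError("no replicas available")
    rng = rng or random.Random()
    fewest = min(error_counts.values())
    # Sorting makes the choice reproducible for a seeded generator.
    candidates = sorted(name for name, errors in error_counts.items() if errors == fewest)
    return rng.choice(candidates)
```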

The real-time clock timer counts wall-clock time. Just add a new XML file with the dictionary config and the dictionary will be initialized without a server restart; you can check system.dictionaries and the appearance of the _processed file. For more about parsing, see the Syntax section.
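A simple polling loop over a directory can illustrate how a server might notice new or edited XML files without a restart. This sketch compares content hashes on each poll and does not report deleted files. Several parts are assumptions:

- the directory layout and the `*.xml` pattern;
- the `snapshot`, `poll_changes` and `demo` helpers, which are hypothetical.

ClickHouse's real reloader, which also tracks ZooKeeper nodes, differs in detail.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List, Tuple


def snapshot(directory: Path, pattern: str = "*.xml") -> Dict[str, str]:
    """Map each matching file name to a SHA-256 digest of its contents."""
    return {p.name: hashlib.sha256(p.read_bytes()).hexdigest()
            for p in sorted(directory.glob(pattern)) if p.is_file()}


def poll_changes(directory: Path, previous: Dict[str, str]) -> Tuple[List[str], Dict[str, str]]:
    """Return (files that are new or modified since `previous`, new snapshot)."""
    current = snapshot(directory)
    changed = [name for name, digest in current.items() if previous.get(name) != digest]
    return changed, current


def demo() -> List[List[str]]:
    """Simulate adding a dictionary config file, polling twice, then editing it."""
    results = []
    with tempfile.TemporaryDirectory() as tmp:
        d = Path(tmp)
        state = snapshot(d)
        (d / "dict_a.xml").write_text("<dictionaries/>")
        changed, state = poll_changes(d, state)
        results.append(changed)          # new file detected
        changed, state = poll_changes(d, state)
        results.append(changed)          # nothing changed since last poll
        (d / "dict_a.xml").write_text("<dictionaries><dictionary/></dictionaries>")
        changed, state = poll_changes(d, state)
        results.append(changed)          # edited file detected
    return results
```

Hashing contents rather than comparing modification times avoids missing edits that land within the file system's timestamp resolution.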

4. default_profile: the default settings profile, specified in the user_config parameter. 70. output_format_csv_crlf_end_of_line: use the DOS/Windows-style line separator (CRLF) in CSV instead of the Unix style (LF). Settings can be appended to an XML tree (default behaviour), or replaced or removed. 13. input_format_allow_errors_num: applies when reading from text formats (CSV, TSV, etc.). Allowed values: 0 or 1. Newly inserted data blocks in tables of this engine family are sent to the other replica nodes in the cluster. This setting applies to each individual query.
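The append/replace/remove behaviour for config overrides (for example, files in config.d) can be approximated as a recursive merge. This is a simplified sketch, and the tag-only matching and exact attribute handling are assumptions. ClickHouse's real merge logic covers more cases. It handles elements as follows:

- elements are matched by tag name only;
- an element with a replace attribute swaps out the whole subtree;
- an element with a remove attribute deletes the matching element;
- anything else is merged recursively, with leaf values overwritten and new elements appended.

```python
import copy
import xml.etree.ElementTree as ET


def merge_config(base: ET.Element, override: ET.Element) -> None:
    """Merge `override` into `base` in place (simplified model of config overrides)."""
    for child in list(override):
        target = base.find(child.tag)
        if child.get("remove") is not None:
            if target is not None:
                base.remove(target)  # remove="...": delete the element
        elif target is None or child.get("replace") is not None:
            if target is not None:
                base.remove(target)  # replace="...": swap the whole subtree
            new = copy.deepcopy(child)
            new.attrib.pop("replace", None)
            base.append(new)         # new elements are appended
        elif len(child) == 0:
            target.text = child.text  # leaf value: the override wins
        else:
            merge_config(target, child)  # default: merge recursively
```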

Default 1; allowed values 0 or 1. 16. input_format_values_deduce_templates_of_expressions: enables or disables template deduction for SQL expressions. Default snappy or deflate; optional value. 95. output_format_avro_sync_interval: sets the minimum data size (in bytes) between synchronization markers in an output Avro file. Excluding MATERIALIZED and ALIAS columns. If for any reason the number of replicas successfully written does not reach insert_quorum, the write fails and the inserted block is removed from all replicas to which it has already been written.

Used for ClickHouse development and performance testing. You can modify dictionaries on the fly without restarting the server. 0: empty cells are filled with the default values of the corresponding field types. This option is only available for the JSONEachRow, CSV, and TabSeparated formats. PARTITION partition | PARTITION ID 'partition_id'. Loss of the ZooKeeper root path /.
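With the setting at 0, empty cells take the default value of the column's type. Below is a minimal Python sketch of that rule for one CSV line. It assumes a hypothetical schema that maps column names to Python types, where 0, 0.0 and '' stand in for the type defaults. Column DEFAULT expressions are not modelled.

```python
import csv
import io
from typing import Dict, List, Tuple, Type

# Stand-ins for the per-type default values (assumption for the example).
TYPE_DEFAULTS: Dict[type, object] = {int: 0, float: 0.0, str: ""}


def parse_csv_line(line: str, schema: List[Tuple[str, Type]]) -> Dict[str, object]:
    """Parse one CSV line, replacing empty or omitted cells with the type default."""
    cells = next(csv.reader(io.StringIO(line)), [])
    row: Dict[str, object] = {}
    for i, (name, typ) in enumerate(schema):
        raw = cells[i] if i < len(cells) else ""  # omitted trailing cells count as empty
        row[name] = TYPE_DEFAULTS[typ] if raw == "" else typ(raw)
    return row
```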

In the default config.xml configuration file, you can see that the three tags

Enabled: if there are multiple rows for the same key, ANY JOIN takes the last matching row. If a replica is unavailable for some time and accumulates 5 errors, and distributed_replica_error_half_life is set to 1 second, the replica is considered normal 3 seconds after the last error. The biggest performance improvement (up to four times faster in rare cases) is seen for queries with multiple simple aggregate functions. Opens https://tabix.io/ when accessing http://localhost:http_port. 78. allow_experimental_cross_to_join_conversion: rewrites comma-joined tables into JOIN ON/USING syntax; if the setting is 0, queries using the comma syntax are not processed and an exception is thrown. 81. optimize_skip_unused_shards: enables or disables skipping unused shards for SELECT queries that have a sharding-key condition in PREWHERE/WHERE (assuming the data is distributed by the sharding key; otherwise it does nothing).
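The worked example above can be reproduced by halving the error count once per half-life: 5 errors, distributed_replica_error_half_life = 1 second, normal again 3 seconds after the last error. The sketch assumes integer halving and treats a replica as normal once its count reaches 0. That gives 5 → 2 → 1 → 0 at one-second steps. It is a simplified model, not ClickHouse's code.

```python
def decayed_errors(errors: int, elapsed_seconds: float, half_life_seconds: float) -> int:
    """Error count after `elapsed_seconds` with no new errors (integer halving)."""
    if half_life_seconds <= 0:
        raise ValueError("half-life must be positive")
    halvings = int(elapsed_seconds // half_life_seconds)
    return errors >> halvings  # each full half-life halves the count


def seconds_until_normal(errors: int, half_life_seconds: float) -> float:
    """Whole half-lives needed for the error count to decay to 0."""
    halvings = 0
    while errors > 0:
        errors >>= 1
        halvings += 1
    return halvings * half_life_seconds
```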

If all replicas of a shard are unavailable, the shard is considered unavailable. Default 0. insert_quorum < 2: quorum writes are disabled; insert_quorum >= 2: quorum writes are enabled. Must be used in combination with sessionIdContext.

https://clickhouse.tech/docs/en/operations/settings/, system.settings -- the user's session settings, users.xml's section. Default 1. Port for communicating with clients over a secure connection using the TCP protocol. Default 1. Queries are recorded in the system.query_log table, not in a separate file.

Only applies when a distributed table with more than one shard is used in the FROM clause. Prohibits these types of subqueries (returns the "Double-distributed in/JOIN subqueries is denied" exception). When a replica fails and, because the replica no longer exists, its metadata cannot be removed through … 39. log_queries: queries sent to ClickHouse are logged according to the rules in the query_log server configuration parameter. … the less memory is consumed. 44. max_insert_threads: the maximum number of threads used to execute INSERT SELECT queries. The maximum number of inbound connections. The default is a newline at the end of the line. Default 0 (no limit). -- How to check the node's certificate. Default 1024. /etc/clickhouse-server/conf.d sub-folder for any (both) settings. ClickHouse applies this setting when a query contains a product of distributed tables, that is, when a query on a distributed table contains a non-GLOBAL subquery. The table name can be changed in the table parameter. 0 (default): throw an exception; a query is not allowed to run if a query with the same query_id is already running. 92. input_format_parallel_parsing: enables order-preserving parallel parsing of data formats. This setting is only used for the Values format. 15. input_format_values_interpret_expressions: enables or disables the full SQL parser if the fast streaming parser cannot parse the data.
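Order-preserving parallel parsing, as described for input_format_parallel_parsing, can be sketched with a thread pool. The input is cut into chunks at line boundaries, the chunks are parsed concurrently, and the results are reassembled in input order. The chunking strategy and the TSV `parse_chunk` function are hypothetical. `concurrent.futures.Executor.map` is used because it returns results in the order of its inputs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split_chunks(data: str, lines_per_chunk: int) -> List[str]:
    """Cut the input at line boundaries so no row is split between chunks."""
    lines = data.splitlines()
    return ["\n".join(lines[i:i + lines_per_chunk])
            for i in range(0, len(lines), lines_per_chunk)]


def parse_chunk(chunk: str) -> List[List[str]]:
    """Hypothetical TSV row parser for one chunk."""
    return [line.split("\t") for line in chunk.splitlines()]


def parallel_parse(data: str, lines_per_chunk: int = 2, workers: int = 4) -> List[List[str]]:
    """Parse chunks concurrently and return the rows in their original order."""
    chunks = split_chunks(data, lines_per_chunk)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parsed = pool.map(parse_chunk, chunks)  # results come back in input order
        return [row for chunk_rows in parsed for row in chunk_rows]
```

Threads keep the example short; in CPython, CPU-bound parsing would need a process pool to run truly in parallel, but the ordering guarantee of `map` is the same.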
